Despite social media platforms’ best efforts to stamp them out, porn bots remain an all but inescapable part of the experience. We’re used to seeing them all over the comment sections of memes and celebrity posts, and if you have a public account, you’ve undoubtedly seen them following you and liking your stories. But their behavior keeps shifting to slip past automated filters, and things are starting to get weird.

The strategy porn bots use these days is a little more abstract than it was in the past, when they would often try to lure users in with suggestive or outright filthy hook lines (like the ever-popular “DON’T LOOK at my STORY, if you don’t want to MASTURBATE!”). Now, bot accounts often post a single, innocuous word that has nothing to do with the post they’re commenting on, sometimes with an emoji or two. On one post I recently came across, five different spam accounts left the following comments: “Pristine 🌿,” “Music 🎶,” “Sapphire 💙,” “Serenity 😌,” and “Faith 🙏.” All five used the same profile picture, a close-up of a person in a red thong spreading their butt cheeks.

Another bot commented “Michigan 🌼” on the same meme post. Its profile picture was a headless front view of someone’s lingerie-clad body. Once you start noticing them, it’s hard not to keep a mental log of the most absurd examples. “🦄agriculture,” one bot wrote. On another post, bots left “insect 🙈” and “terror 🌟.” The bizarre one-word comments are everywhere. It’s as if the porn bots have completely lost their minds.

In reality, what we’re seeing is yet another evasion tactic scammers use to help their bots slip past Meta’s detection systems. That, and they may be getting a little lazy.

“They just want to get into the conversation, so having to craft a coherent sentence probably doesn’t make sense for them,” Satnam Narang told Engadget. Narang is a research engineer at the cybersecurity company Tenable and has been studying social media scams since the MySpace days. He says that once scammers get their bots into the mix, they can have other bots like those comments to boost them further.

Using random words lets scammers slip past moderators who may be scanning for particular keywords. They’ve previously experimented with tactics like inserting spaces or other characters between the letters of words the system might flag. Words like “insect” and “terror” are so innocuous, Narang said, that you can’t necessarily ban or remove an account for commenting them. But something like “Check my story” might set off their systems’ alarms. It’s an evasion tactic, he said, and if you’re seeing these bots on well-known accounts, it’s clearly working. It’s just part of that dance.

That dance is one social media platforms and bots have been doing for years, seemingly to no end. Meta has said it stops millions of fake accounts from being created on a daily basis across its suite of apps, and catches “millions more, often within minutes after creation.” Yet spam accounts are still prevalent enough to show up in droves on high traffic posts and slip into the story views of even users with small followings.

The company’s most recent transparency report, which includes stats on fake accounts it’s removed, shows Facebook nixed over a billion fake accounts last year alone, but currently offers no data for Instagram. “Spammers use every platform available to them to deceive and manipulate people across the internet and constantly adapt their tactics to evade enforcement,” a Meta spokesperson said. “That is why we invest heavily in our enforcement and review teams, and have specialized detection tools to identify spam.”

In December of last year, Instagram released several tools meant to give users more insight into its spam bot policies and to give creators more control over their interactions with these accounts. For example, account holders can now bulk-delete follow requests from profiles flagged as potential spam. Instagram users may also have noticed the “hidden comments” section, which now appears more often at the bottom of some posts. Comments flagged as offensive or spam can be moved there to reduce interactions with them.

Scammers are winning this game of “whack-a-mole,” according to Narang. “Just when you think you’ve got it, it seems to appear somewhere else.” Scammers are adept at figuring out why they were banned and coming up with new ways to avoid detection, he said.

You might assume social media users today are too savvy to fall for obviously automated comments like “Michigan 🌖.” But as Narang points out, scammers don’t necessarily need to trick victims into handing over their money. They’re often part of affiliate programs, and all they need to do is get people to visit a website and sign up for free. These sites are usually billed as an “adult dating service” or something similar. The bots’ “link in bio” typically leads to an intermediary site hosting a handful of URLs that may lead to the service in question, promising XXX chats or photos.

Scammers may earn a small amount, maybe a dollar or two, for every real person who creates an account. If someone signs up with a credit card, the payout is much larger. “You’re making some money even if one percent of [the target demographic] signs up,” Narang said. And “you’re probably making a decent chunk of change if you’re running multiple, different accounts and you have different profiles pushing these links out,” he added. Narang said the spam bots Instagram scammers use are likely also present on TikTok, X, and other sites. “Everything adds up.”

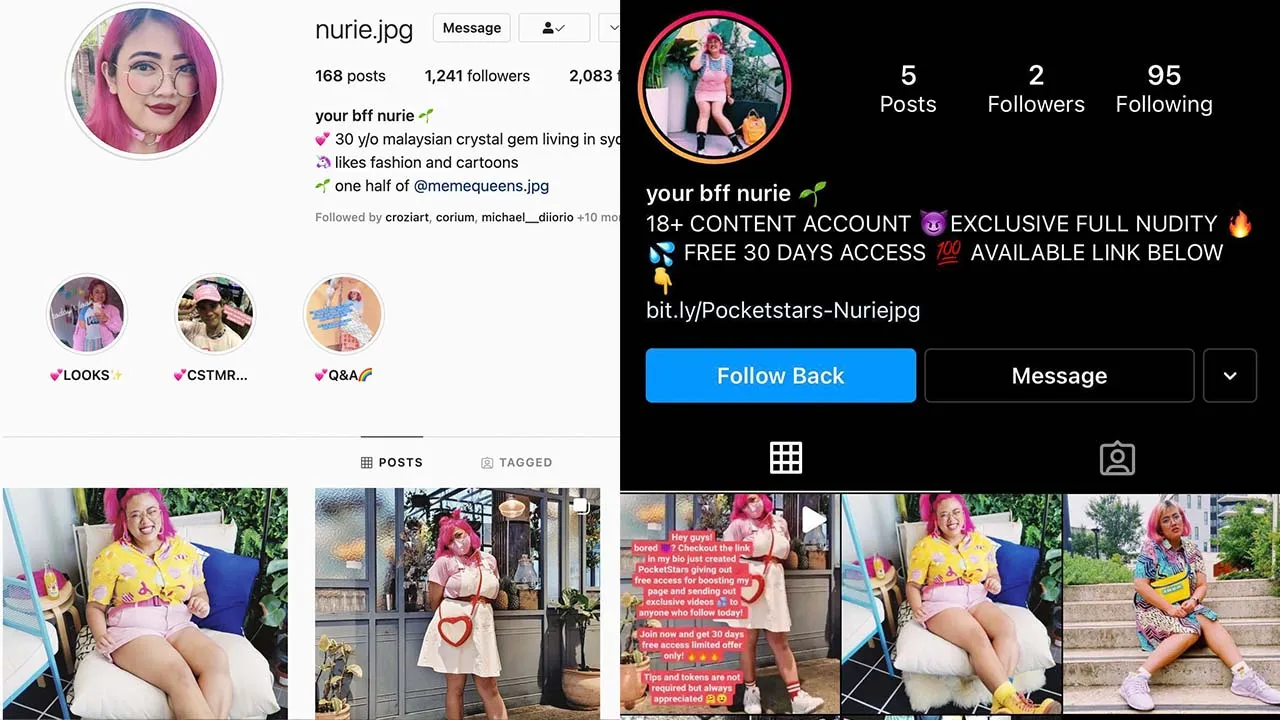

Spam bots do harm beyond the trouble they may cause the few people duped into signing up for a shady service. Porn bots typically use real people’s photos stolen from public profiles. That can be humiliating. Speaking from personal experience, it gets especially awkward once the spam account starts sending friend requests to everyone the person in the photos knows. Getting Meta to take these cloned accounts down can be a tedious process.

Their presence also makes things harder for real content creators in sex work and related industries. Many of them rely on social media to reach a wider audience but constantly have to fight to avoid being taken off platforms. Impostor Instagram accounts can rack up thousands of followers, pulling attention away from the genuine accounts and casting doubt on their authenticity. And in Meta’s hunt for bots, genuine accounts can be mistakenly flagged as spam, which puts creators who post sexual content at even greater risk of suspensions and bans.

Unfortunately, there isn’t a simple fix for the bot problem. “They’re just always coming up with new schemes and finding new ways around [moderation],” Narang said. Scammers always follow the money and, by extension, the crowd. Porn bots on Instagram have evolved to the point of posting nonsense to dodge moderators. Meanwhile, more advanced bots aimed at a younger audience on TikTok are posting fairly believable comments on Taylor Swift videos, according to Narang.

Sooner or later, social media’s next big thing is bound to emerge, and they’ll go there too. “These scammers will have incentives as long as there is money to be made,” Narang said.